Intro

The bulk of Supek's processing power is based on HPE Cray EX technology. This technology is built on the Shasta architecture and includes a number of features intended for very large and demanding computing workloads, such as simulations, modeling, genetics and other scientific research and business applications. One of the key features of Supek is its interconnect, the Cray Slingshot network, which enables fast data exchange between nodes; this is essential for applications that run in parallel across many nodes. Supek also has a highly efficient cooling system that keeps the computer system at an optimal temperature and thus sustains top performance even under the most demanding operating conditions. Heat is removed with water through a system of pipes and heat exchangers, the so-called cooling distribution unit (CDU). The Supek supercomputer consists of several components typically found in computer clusters:

- Access - Servers through which users interact with the cluster

- Processors - The part of the supercomputer that contains the processor cores

- Data repository - Central storage accessible from the entire network

- Interconnection - A high-performance network that connects all components

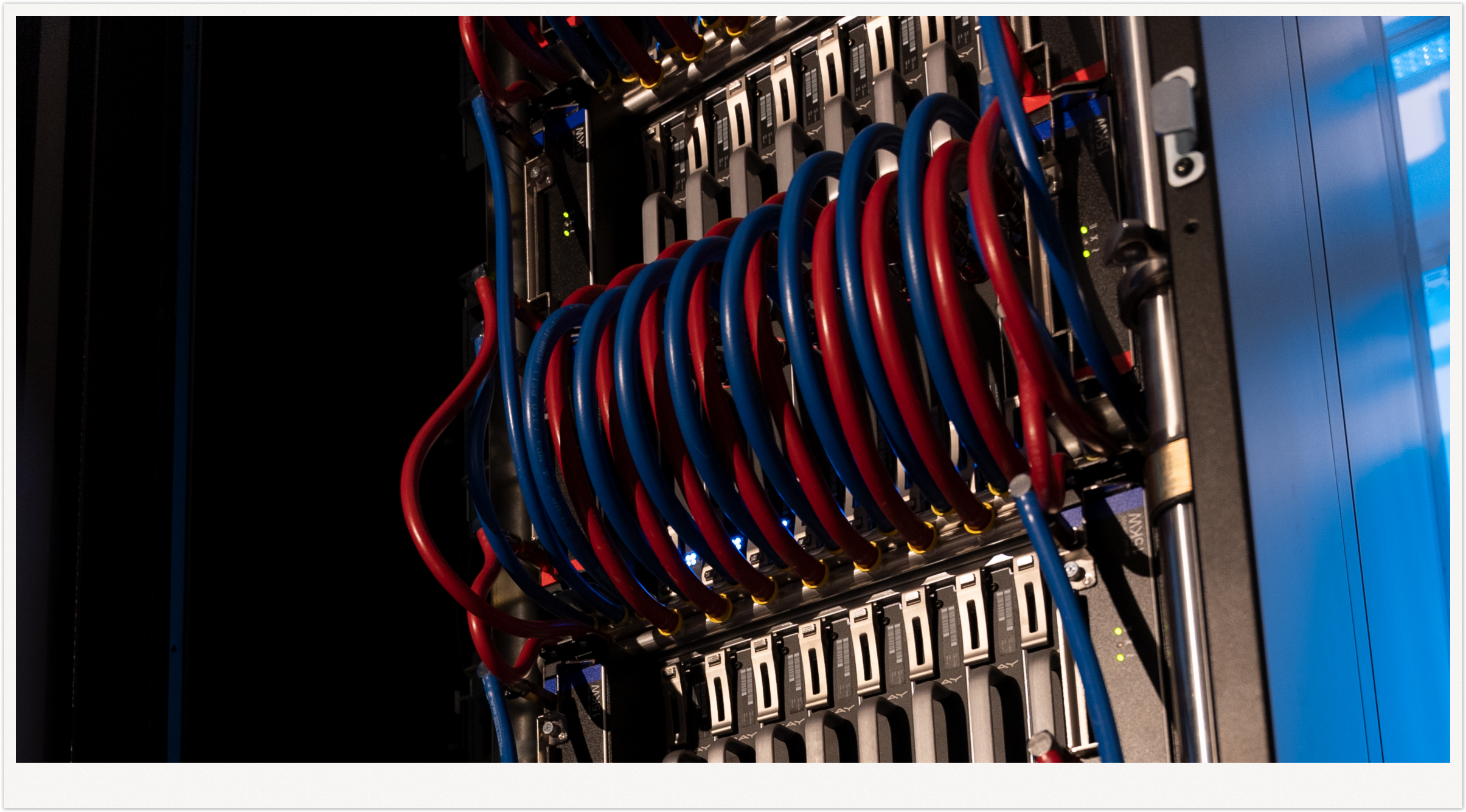

Heat removal with water through a pipe system

Processors

All servers on the Supek cluster contain one or two AMD EPYC 7763 CPUs.

The AMD EPYC 7763 belongs to AMD's EPYC 7003 (Milan) processor series. It is built on the Zen 3 architecture, which improves energy efficiency and overall performance compared to previous generations, and is manufactured in a 7-nanometer process, which helps keep power consumption and heat output low. The processor can also dynamically adjust its operating power to optimize energy efficiency, which is useful in server environments that need high processing power but also want to reduce power consumption.

The processor has 64 cores. The cores are fully compatible with the x86-64 architecture and support 256-bit AVX2 vector instructions, with a peak throughput of 16 double-precision FLOPs per clock cycle per core (using AVX2 FMA operations), or a total of about 2.5 teraFLOPS per processor at the base clock of 2.45 GHz.
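
The 2.5 teraFLOPS figure follows directly from the numbers above. A minimal Python sketch of the calculation, assuming all 64 cores sustain the 2.45 GHz base clock while issuing two 256-bit FMA operations per cycle (4 doubles x 2 operations each). Real clocks under AVX2 load vary, so this is a theoretical upper bound, not a measured value:

```python
# Theoretical peak double-precision throughput of one AMD EPYC 7763,
# using the figures quoted in the text. This is an upper bound, not a benchmark.

CORES = 64
DP_FLOPS_PER_CYCLE = 16   # 2 FMA pipes x 4 doubles per 256-bit vector x 2 ops per FMA
BASE_CLOCK_HZ = 2.45e9    # base clock; actual clocks under load may differ


def peak_flops(cores: int, flops_per_cycle: int, clock_hz: float) -> float:
    """Return theoretical peak FLOP/s as cores x FLOPs per cycle x clock rate."""
    return cores * flops_per_cycle * clock_hz


if __name__ == "__main__":
    per_cpu = peak_flops(CORES, DP_FLOPS_PER_CYCLE, BASE_CLOCK_HZ)
    print(f"Peak per processor: {per_cpu / 1e12:.2f} TFLOPS")  # Peak per processor: 2.51 TFLOPS
```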

The specifications of the AMD EPYC 7763 processor are as follows:

- Number of cores: 64

- Number of threads: 128

- Base clock: 2.45 GHz

- Maximum clock: 3.5 GHz

- Cache memory: L3 - 256 MB (shared), L2 - 512 kB per core, L1 - 32 kB instruction + 32 kB data per core

- TDP: 280 W

- Supports DDR4 memory modules up to 3200 MT/s (DDR4-3200)

- Supports up to eight channels of DDR4 memory

- PCIe version: 4.0
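
The memory specifications above also determine the theoretical peak memory bandwidth of one processor. A minimal sketch, assuming the standard 64-bit (8-byte) data width of a DDR4 channel and all eight channels populated with DDR4-3200 modules; sustained bandwidth in real applications is lower:

```python
# Theoretical peak DDR4 bandwidth of one AMD EPYC 7763 socket.

CHANNELS = 8                   # memory channels per processor (from the spec list)
TRANSFERS_PER_SECOND = 3200e6  # DDR4-3200: 3200 million transfers per second
BYTES_PER_TRANSFER = 8         # assumption: 64-bit data bus per DDR4 channel (ECC bits excluded)


def peak_memory_bandwidth(channels: int, transfers_per_s: float, bytes_per_transfer: int) -> float:
    """Return theoretical peak bandwidth in bytes per second."""
    return channels * transfers_per_s * bytes_per_transfer


if __name__ == "__main__":
    per_socket = peak_memory_bandwidth(CHANNELS, TRANSFERS_PER_SECOND, BYTES_PER_TRANSFER)
    print(f"Per socket: {per_socket / 1e9:.1f} GB/s")           # Per socket: 204.8 GB/s
    print(f"Two-socket node: {2 * per_socket / 1e9:.1f} GB/s")  # Two-socket node: 409.6 GB/s
```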

Supek has a total of 80 NVIDIA A100 40GB GPUs in the SXM form factor on the GPU work nodes and one GPU of the same model in the PCIe form factor on the GPU access node.

The NVIDIA A100 40GB is a GPU designed for demanding computational workloads such as scientific computing, machine learning and high-performance computing. Its Ampere architecture provides higher data-processing performance than previous NVIDIA GPU generations. Its specifications include:

- Architecture: Ampere

- Processor: NVIDIA A100 Tensor Core GPU

- Number of CUDA cores: 6,912

- Multi-Instance GPU (MIG): various instance sizes, up to 7 instances of 5 GB each

- Number of Tensor cores: 432

- Memory: 40 GB

- Memory type: HBM2

- Memory bus width: 5120 bit

- Memory bandwidth: 1555 GB/s

- TDP: 500 W per GPU (2000 W for the four GPUs of a GPU work node)
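
The memory bandwidth figure is consistent with the bus width. A minimal sketch, assuming an HBM2 data rate of about 2.43 GT/s per pin (a memory clock of roughly 1215 MHz with double data rate); this data rate is not stated in the specifications above:

```python
# Peak HBM2 bandwidth of an A100 40GB from bus width and per-pin data rate.

BUS_WIDTH_BITS = 5120       # from the specification list
DATA_RATE_PER_PIN = 2.43e9  # assumption: ~1215 MHz HBM2 clock x 2 (double data rate)


def memory_bandwidth_gbs(bus_width_bits: int, transfers_per_s: float) -> float:
    """Return peak bandwidth in GB/s: bits per transfer x transfers per second / 8 bits per byte."""
    return bus_width_bits * transfers_per_s / 8 / 1e9


if __name__ == "__main__":
    print(f"{memory_bandwidth_gbs(BUS_WIDTH_BITS, DATA_RATE_PER_PIN):.1f} GB/s")  # 1555.2 GB/s
```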

The NVIDIA A100 Tensor Core GPU has 6,912 CUDA cores and 432 Tensor cores, which differ in their primary function. CUDA cores are general-purpose cores that run a wide range of parallelizable algorithms for image processing, scientific computing and many other applications. Tensor cores are specialized units that perform matrix multiply-accumulate operations (D = A x B + C on small matrix tiles) very quickly, which is critical for demanding machine learning workloads.
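
To make the distinction concrete, the sketch below shows in plain Python the kind of operation a Tensor core accelerates: a fused multiply-accumulate D = A x B + C on a small matrix tile, repeated over tiles to build a full matrix product. The 4 x 4 tile size and the function names are hypothetical choices for illustration; real Tensor core tile shapes depend on the data type, and real code uses libraries such as cuBLAS or cuDNN rather than hand-written loops:

```python
from typing import List

Matrix = List[List[float]]

TILE = 4  # hypothetical tile edge; real Tensor core shapes depend on the data type


def tile_mma(a: Matrix, b: Matrix, c: Matrix) -> Matrix:
    """Return D = A x B + C for one square tile (the step a Tensor core does in hardware)."""
    n = len(a)
    return [
        [c[i][j] + sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def tiled_matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two n x n matrices (n a multiple of TILE) by accumulating tile MMAs."""
    n = len(a)
    if n % TILE != 0:
        raise ValueError("matrix size must be a multiple of TILE")
    out = [[0.0] * n for _ in range(n)]
    for bi in range(0, n, TILE):
        for bj in range(0, n, TILE):
            acc = [[0.0] * TILE for _ in range(TILE)]  # running C for this output tile
            for bk in range(0, n, TILE):
                a_tile = [row[bk:bk + TILE] for row in a[bi:bi + TILE]]
                b_tile = [row[bj:bj + TILE] for row in b[bk:bk + TILE]]
                acc = tile_mma(a_tile, b_tile, acc)
            for i in range(TILE):
                out[bi + i][bj:bj + TILE] = acc[i]
    return out


if __name__ == "__main__":
    n = 8
    a = [[float(i + j) for j in range(n)] for i in range(n)]
    identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    assert tiled_matmul(a, identity) == a  # multiplying by the identity returns A
```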

Each GPU has 40 GB of high-bandwidth HBM2 memory. This allows large datasets and models to stay in GPU memory during computation instead of being repeatedly transferred from host memory, which reduces the time needed to complete computations.
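
A quick way to judge whether a problem fits in GPU memory is to compare its data size with the 40 GB capacity. A minimal sketch for dense double-precision (8-byte) matrices, assuming the full 40 x 10^9 bytes are usable; in practice the CUDA context and libraries reserve some memory, so the real limit is somewhat lower. The helper name is hypothetical:

```python
import math

GPU_MEMORY_BYTES = 40 * 10**9  # 40 GB per A100, treated as decimal gigabytes (assumption)
BYTES_PER_DOUBLE = 8


def max_square_matrix_dim(memory_bytes: int, matrices: int,
                          bytes_per_element: int = BYTES_PER_DOUBLE) -> int:
    """Largest n such that `matrices` dense n x n matrices fit in `memory_bytes`."""
    return math.isqrt(memory_bytes // (matrices * bytes_per_element))


if __name__ == "__main__":
    # One matrix held on the GPU.
    print(max_square_matrix_dim(GPU_MEMORY_BYTES, 1))  # 70710
    # Three matrices, e.g. A, B and C for a product C = A x B.
    print(max_square_matrix_dim(GPU_MEMORY_BYTES, 3))  # 40824
```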

Nodes

Supek is built as an HPE Cray EX2500 system and consists of the following node types:

| Purpose | Number | CPU | GPU | RAM (GB) |
|---|---|---|---|---|
| CPU access node | 1 | 2 x AMD EPYC 7763 | - | 256 |
| GPU access node | 1 | 1 x AMD EPYC 7763 | 1 x NVIDIA A100 (PCIe) | 128 |
| CPU work node | 52 | 2 x AMD EPYC 7763 | - | 256 |
| GPU work node | 20 | 1 x AMD EPYC 7763 | 4 x NVIDIA A100 (SXM) | 512 |
| Big memory node | 2 | 2 x AMD EPYC 7763 | - | 4096 |
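
The table can be used to derive cluster-wide totals. A minimal sketch, assuming 64 cores per EPYC 7763 as stated earlier and counting RAM as listed in the table (GPU memory excluded); the `NodeType` class is a hypothetical helper:

```python
from dataclasses import dataclass
from typing import Dict, List

CORES_PER_CPU = 64  # AMD EPYC 7763


@dataclass(frozen=True)
class NodeType:
    purpose: str
    count: int
    cpus: int
    gpus: int
    ram_gb: int


NODES = [
    NodeType("CPU access node", 1, 2, 0, 256),
    NodeType("GPU access node", 1, 1, 1, 128),
    NodeType("CPU work node", 52, 2, 0, 256),
    NodeType("GPU work node", 20, 1, 4, 512),
    NodeType("Big memory node", 2, 2, 0, 4096),
]


def totals(nodes: List[NodeType]) -> Dict[str, int]:
    """Sum node, core, GPU and RAM counts over the given node types."""
    return {
        "nodes": sum(n.count for n in nodes),
        "cores": sum(n.count * n.cpus * CORES_PER_CPU for n in nodes),
        "gpus": sum(n.count * n.gpus for n in nodes),
        "ram_gb": sum(n.count * n.ram_gb for n in nodes),
    }


if __name__ == "__main__":
    work_nodes = [n for n in NODES if "access" not in n.purpose]
    print(totals(NODES))       # {'nodes': 76, 'cores': 8384, 'gpus': 81, 'ram_gb': 32128}
    print(totals(work_nodes))  # {'nodes': 74, 'cores': 8192, 'gpus': 80, 'ram_gb': 31744}
```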

Access nodes

There are two access nodes available on the Supek cluster.

The first access node (CPU access server) is an HPE ProLiant DL385 Gen10 Plus v2. It has no GPU but contains 2 AMD EPYC 7763 processors with 64 cores each (128 cores in total). The motherboard has 8 memory slots per processor with a maximum transfer rate of 3200 MT/s, populated with 16 DDR4 modules of 16 GB each (256 GB in total). The server is equipped with one local 1.92 TB NVMe SSD and two additional NVMe SSDs of 7.68 TB each.

The second access node (GPU access server) is an HPE Apollo 6500 Gen10 Plus system with an HPE ProLiant XL645d Gen10 Plus node. It is equipped with one NVIDIA A100 GPU in the PCIe form factor and one AMD EPYC 7763 processor with 64 cores. The motherboard has 8 memory slots per processor and supports a transfer rate of 3200 MT/s, populated with 8 DDR4 modules of 16 GB each (128 GB in total). The server is equipped with one local 1.92 TB NVMe SSD and two additional NVMe SSDs of 7.68 TB each.

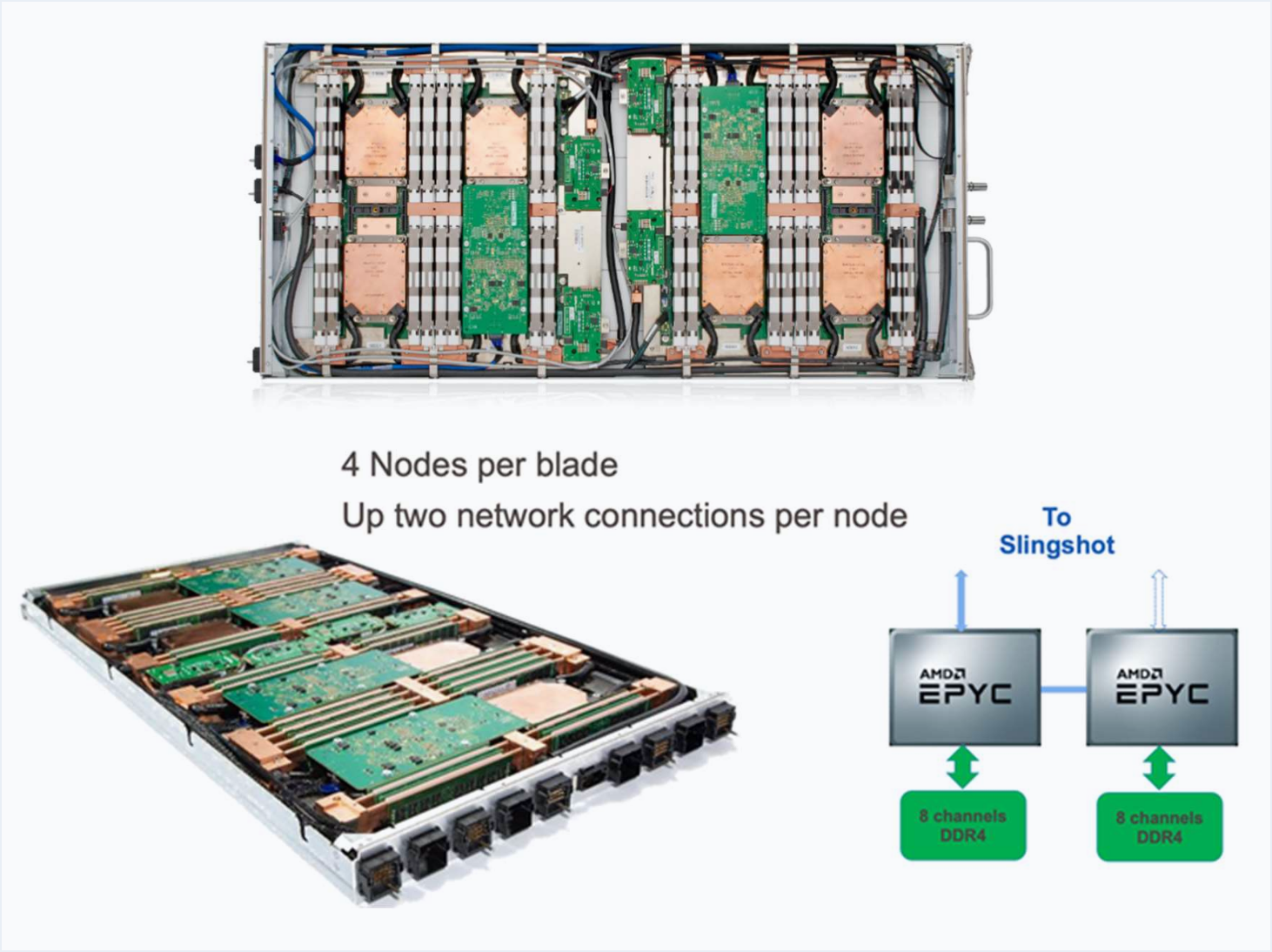

CPU work node

The CPU compute nodes are housed in HPE Cray EX425 blades, each containing 4 compute nodes. The Supek cluster has a total of 52 CPU nodes in 13 blades.

Blade HPE Cray EX425

Each CPU node contains 2 AMD EPYC 7763 processors with 64 cores each (128 cores per node, 6,656 cores across all CPU nodes) and a motherboard with 8 memory slots per processor with a maximum transfer rate of 3200 MT/s, populated with 16 DDR4 modules of 16 GB each (256 GB in total).
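
Combining the core count with the per-core peak of 16 double-precision FLOPs per cycle quoted in the Processors section gives the theoretical peak of the CPU partition. A minimal sketch, assuming every core runs at the 2.45 GHz base clock; this is an upper bound, not a measured (e.g. HPL) result:

```python
from typing import Tuple

CPU_NODES = 52
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 64
DP_FLOPS_PER_CYCLE = 16  # per core, AVX2 FMA (see the Processors section)
BASE_CLOCK_HZ = 2.45e9   # assumption: all cores at base clock


def partition_peak(nodes: int, sockets: int, cores_per_socket: int,
                   flops_per_cycle: int, clock_hz: float) -> Tuple[int, float]:
    """Return (total cores, theoretical peak in TFLOPS) for a homogeneous partition."""
    total_cores = nodes * sockets * cores_per_socket
    return total_cores, total_cores * flops_per_cycle * clock_hz / 1e12


if __name__ == "__main__":
    cores, tflops = partition_peak(CPU_NODES, SOCKETS_PER_NODE, CORES_PER_SOCKET,
                                   DP_FLOPS_PER_CYCLE, BASE_CLOCK_HZ)
    print(cores)                    # 6656
    print(f"{tflops:.1f} TFLOPS")  # 260.9 TFLOPS
```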

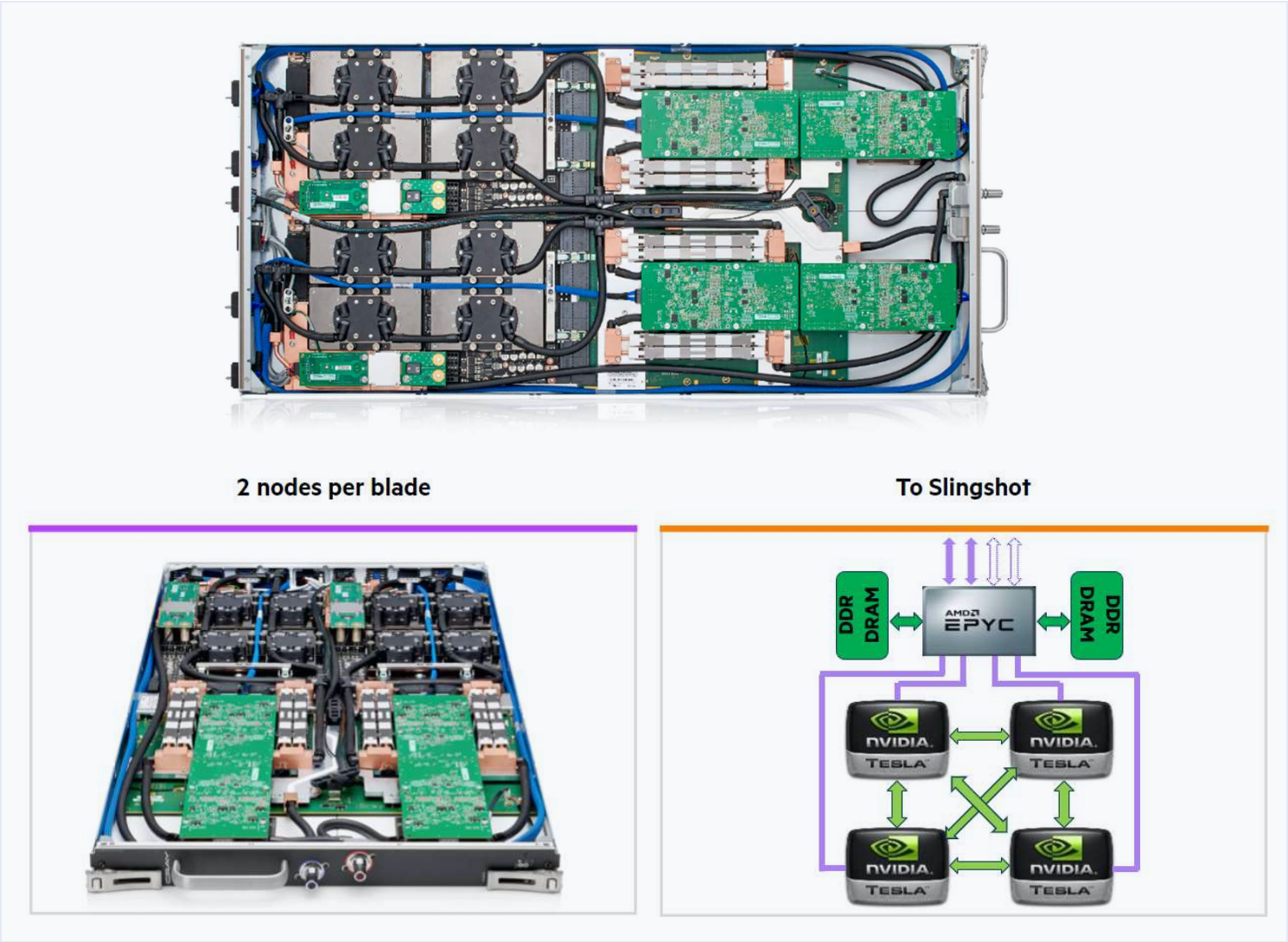

GPU work node

Each HPE Cray EX235n blade contains two GPU nodes. The cluster has a total of 20 GPU nodes in 10 blades.

Blade HPE Cray EX235n

Each node contains 1 AMD EPYC 7763 processor with 64 cores and 4 NVIDIA HGX A100 GPUs in the SXM form factor, each with 40 GB of built-in memory. The motherboard has 8 memory slots per processor with a maximum transfer rate of 3200 MT/s, populated with 8 DDR4 modules of 64 GB each (512 GB in total).
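
When sizing jobs, it can help to know how each GPU node's resources divide per GPU. A minimal sketch using only figures from this page (64 cores, 512 GB RAM, 4 GPUs with 40 GB and 1555 GB/s each); whether the scheduler actually assigns resources in these proportions is an assumption, not something stated here:

```python
GPU_NODES = 20
GPUS_PER_NODE = 4
CPU_CORES_PER_NODE = 64
HOST_RAM_GB = 512
HBM_PER_GPU_GB = 40
HBM_BANDWIDTH_PER_GPU_GBS = 1555


def per_node_summary() -> dict:
    """Aggregate GPU resources of one GPU work node and an even per-GPU split of host resources."""
    return {
        "gpu_memory_gb": GPUS_PER_NODE * HBM_PER_GPU_GB,                 # 160
        "gpu_bandwidth_gbs": GPUS_PER_NODE * HBM_BANDWIDTH_PER_GPU_GBS,  # 6220
        "cores_per_gpu": CPU_CORES_PER_NODE // GPUS_PER_NODE,            # 16
        "host_ram_per_gpu_gb": HOST_RAM_GB // GPUS_PER_NODE,             # 128
    }


if __name__ == "__main__":
    print(per_node_summary())
    total_hbm = GPU_NODES * GPUS_PER_NODE * HBM_PER_GPU_GB
    print(f"GPU memory across all GPU nodes: {total_hbm} GB")  # GPU memory across all GPU nodes: 3200 GB
```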

Big memory nodes

Supek contains 2 HPE ProLiant DL385 Gen10 Plus v2 big memory servers, each equipped with 2 AMD EPYC 7763 processors. The motherboard has 16 memory slots per processor and supports a transfer rate of 3200 MT/s; 32 DDR4 modules of 128 GB each give each node a total of 4096 GB of memory. Each server also has a local 1.92 TB NVMe SSD.
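
The difference between the node types is clearest as memory per core. A minimal sketch using the core and RAM figures from the node table; the dictionary and helper function are hypothetical:

```python
# (cores per node, RAM in GB per node), taken from the node table
NODE_TYPES = {
    "CPU work node": (128, 256),
    "GPU work node": (64, 512),
    "Big memory node": (128, 4096),
}


def memory_per_core_gb(cores: int, ram_gb: int) -> float:
    """Return RAM per core in GB when a node's memory is shared evenly by its cores."""
    return ram_gb / cores


if __name__ == "__main__":
    for name, (cores, ram_gb) in NODE_TYPES.items():
        print(f"{name}: {memory_per_core_gb(cores, ram_gb):.0f} GB per core")
    # CPU work node: 2 GB per core
    # GPU work node: 8 GB per core
    # Big memory node: 32 GB per core
```

A job that needs much more than about 2 GB per core may leave cores idle on a CPU work node, which is the kind of workload the big memory nodes are suited for.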

Interconnection

The compute and storage parts of the Supek supercomputer are connected by the latest version of Cray's Slingshot interconnect, designed for typical HPC workloads that process and exchange large amounts of data.

The key to Slingshot's performance is its link speed of 200 Gbps, which, combined with 64 ports per switch and the Dragonfly network topology, delivers very high transfer rates.
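
How far 64-port switches can scale in a Dragonfly can be estimated with the balanced-Dragonfly rule from the original Dragonfly proposal (Kim et al., 2008): each switch has p endpoint ports, a - 1 local ports and h global ports, with a = 2p = 2h, giving at most a·h + 1 groups and a·p·(a·h + 1) endpoints. A minimal sketch under that textbook assumption; it describes the topology's theoretical maximum, not Supek's actual (much smaller) Slingshot configuration, whose parameters are not given here:

```python
LINK_GBPS = 200    # Slingshot link speed quoted above
SWITCH_PORTS = 64  # ports per switch quoted above


def balanced_dragonfly(radix: int) -> dict:
    """Largest balanced Dragonfly (a = 2p = 2h) that fits in switches of the given radix."""
    h = (radix + 1) // 4  # p + (a - 1) + h = 4h - 1 ports per switch
    p, a = h, 2 * h
    groups = a * h + 1    # each group has one global link to every other group
    return {
        "endpoints_per_switch": p,
        "switches_per_group": a,
        "global_links_per_switch": h,
        "ports_used": p + (a - 1) + h,
        "groups": groups,
        "max_endpoints": a * p * groups,
    }


if __name__ == "__main__":
    print(balanced_dragonfly(SWITCH_PORTS))
    print(f"One {LINK_GBPS} Gbps link carries up to {LINK_GBPS / 8:.0f} GB/s")  # 25 GB/s
```

For 64-port switches this gives 16 endpoints, 31 local links and 16 global links per switch (63 ports used), 513 groups and up to 262,656 endpoints.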

Beyond raw hardware performance, the Slingshot interconnect optimizes network data transmission with adaptive packet routing (which takes the load on other switches into account) and congestion control (which increases or decreases the rate at which traffic-generating servers inject data).
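
Adaptive routing can be illustrated with the widely used UGAL heuristic: send a packet on the minimal path unless a longer, non-minimal path is estimated to be faster given current queue occupancy. The sketch below is a generic illustration with hypothetical names; Slingshot's actual routing and congestion-control algorithms are not described in this document and may differ:

```python
from dataclasses import dataclass


@dataclass
class PathOption:
    name: str
    hops: int         # number of switch-to-switch hops on the path
    queue_depth: int  # packets waiting at this path's first output port


def estimated_delay(path: PathOption) -> int:
    """UGAL-style cost estimate: queue occupancy weighted by path length."""
    return path.queue_depth * path.hops


def choose_path(minimal: PathOption, non_minimal: PathOption) -> PathOption:
    """Prefer the minimal path; divert only when the non-minimal path is estimated faster."""
    if estimated_delay(minimal) <= estimated_delay(non_minimal):
        return minimal
    return non_minimal


if __name__ == "__main__":
    quiet = choose_path(PathOption("minimal", 3, 1), PathOption("via other group", 5, 1))
    busy = choose_path(PathOption("minimal", 3, 10), PathOption("via other group", 5, 2))
    print(quiet.name)  # minimal
    print(busy.name)   # via other group
```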

Dragonfly network topology: nodes (circles) are connected through switches (squares) into local groups (electrical links), and the groups are connected to each other by global links (optical cables) (Source)

Data storage

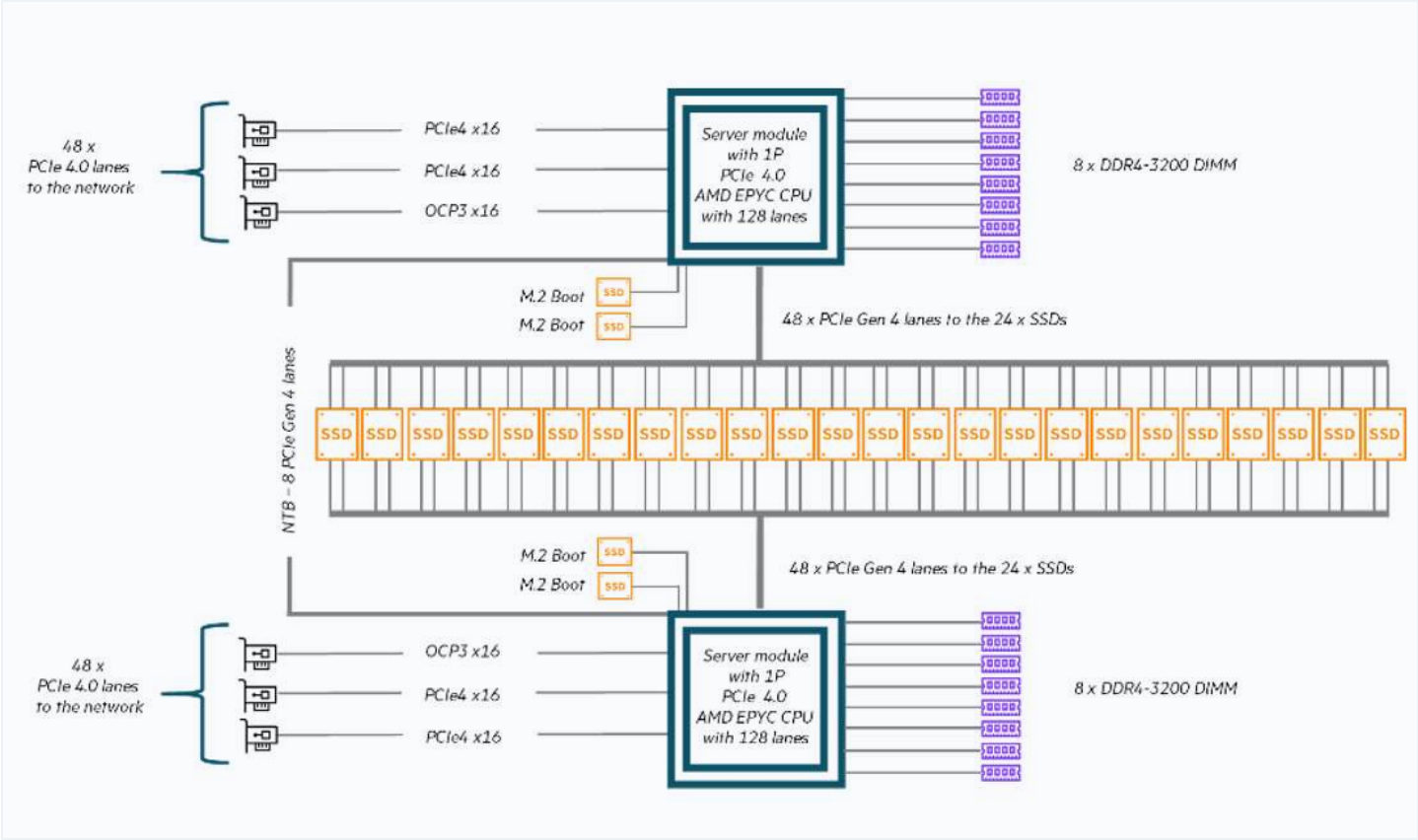

The storage system that serves user jobs on the Supek cluster is an HPE ClusterStor E1000.

The HPE ClusterStor E1000 storage architecture is based on a flexible hardware design that uses current storage technology, including PCIe Gen 4 NVMe flash SSDs, HPE Slingshot 200 Gbps networking and very high-density enclosures. This enables metadata-optimized storage on high-performance NVMe flash, providing an optimal I/O path for workloads with sequential I/O, high IOPS, or both. Performance and capacity can be scaled independently.

The basic building block is a server element that can serve as a storage capacity node (Flash Scalable Storage Unit, SSU-F), a metadata node (Metadata Management Unit, MDU) or a management node (System Management Unit, SMU). Each element contains 2 server boards, each with one processor, and 24 NVMe SSD slots that are populated according to the element's role.

Schematic representation of the HPE ClusterStor E1000 system element

The solution consists of:

- 1x System Management Unit (SMU) with 5x 1.6 TB NVMe SSDs

- 1x Metadata Management Unit (MDU) with 24x 3.2 TB NVMe SSDs

- 6x Flash Scalable Storage Units (SSU-F), each with 24x 7.68 TB NVMe SSDs

- 2x Local Management Network (LMN) switches

- 2x Slingshot (SS) switches

- 1x Flash Scalable Storage Unit (SSU-F) with 24x 7.68 TB NVMe SSDs and a total capacity of 184 TB, providing local disk space for the CPU and GPU servers
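
The listed drive counts give the raw flash capacity of each unit type. A minimal sketch that multiplies units x drives x drive size; it reports raw capacity in decimal TB only, and the usable capacity after RAID/parity and file-system overhead is lower (how much lower is not stated here):

```python
# (unit type, number of units, drives per unit, drive size in TB), from the list above
UNITS = [
    ("SMU", 1, 5, 1.6),
    ("MDU", 1, 24, 3.2),
    ("SSU-F (shared storage)", 6, 24, 7.68),
    ("SSU-F (local server disk space)", 1, 24, 7.68),
]


def raw_capacity_tb(units: int, drives: int, drive_tb: float) -> float:
    """Raw capacity in TB before any RAID, parity or file-system overhead."""
    return units * drives * drive_tb


if __name__ == "__main__":
    for name, units, drives, drive_tb in UNITS:
        print(f"{name}: {raw_capacity_tb(units, drives, drive_tb):.2f} TB raw")
```

A single SSU-F comes to 24 x 7.68 = 184.32 TB raw, which matches the 184 TB quoted for the local server disk space; the six shared SSU-F units together hold about 1105.92 TB raw.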