...

Supek is an HPE Cray EX2500 system consisting of the following servers:

| Purpose | Number of nodes | CPU | GPU | RAM per node (GB) |
|---|---|---|---|---|
| CPU access node | 1 | 2 x AMD EPYC 7763 | - | 256 |
| GPU access node | 1 | 1 x AMD EPYC 7763 | 1 x NVIDIA A100 (PCIe) | 128 |
| CPU work node | 52 | 2 x AMD EPYC 7763 | - | 256 |
| GPU work node | 20 | 1 x AMD EPYC 7763 | 4 x NVIDIA A100 (SXM) | 512 |
| Big memory node | 2 | 2 x AMD EPYC 7763 | - | 4096 |
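The per-row figures in the table can be summed into cluster-wide totals. The sketch below does that arithmetic; the only figure not taken from the table is the assumption that each AMD EPYC 7763 has 64 cores, and the names `NODES`, `CORES_PER_CPU` and `totals` are hypothetical helpers for illustration.

```python
# Node inventory copied from the table: (purpose, nodes, CPUs per node,
# GPUs per node, RAM per node in GB).
NODES = [
    ("CPU access node", 1, 2, 0, 256),
    ("GPU access node", 1, 1, 1, 128),
    ("CPU work node", 52, 2, 0, 256),
    ("GPU work node", 20, 1, 4, 512),
    ("Big memory node", 2, 2, 0, 4096),
]

# Assumption (not stated in the table): an AMD EPYC 7763 has 64 cores.
CORES_PER_CPU = 64


def totals(nodes):
    """Sum node, CPU, core, GPU and RAM counts over all table rows."""
    n_nodes = sum(row[1] for row in nodes)
    cpus = sum(row[1] * row[2] for row in nodes)
    gpus = sum(row[1] * row[3] for row in nodes)
    ram_gb = sum(row[1] * row[4] for row in nodes)
    return {"nodes": n_nodes, "cpus": cpus, "cores": cpus * CORES_PER_CPU,
            "gpus": gpus, "ram_gb": ram_gb}


print(totals(NODES))
# {'nodes': 76, 'cpus': 131, 'cores': 8384, 'gpus': 81, 'ram_gb': 32128}
```

These totals include the two access nodes, so the capacity actually available to batch jobs is slightly lower.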

Access nodes

There are two access nodes on the Supek cluster.

...

Supek contains two HPE ProLiant DL385 Gen10 Plus v2 servers. Each server is equipped with two AMD EPYC 7763 processors. The server motherboard has 16 memory slots per processor and supports a transfer rate of 3200 MT/s. Thirty-two DDR4 memory modules of 128 GB each give every node a total of 4096 GB of working memory. Each server also has a local NVMe SSD with a capacity of 1.92 TB.
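The memory figures for these servers can be checked with a few lines of arithmetic. The sketch below recomputes the 4096 GB total from the slot count and module size, and estimates a theoretical peak memory bandwidth. The bandwidth part rests on assumptions not stated in the text (8 DDR4 channels per EPYC 7763 socket and an 8-byte data path per channel), and the rate the server actually runs at with all 16 slots per socket populated may be lower than 3200 MT/s, so treat the result as an upper bound.

```python
SOCKETS = 2              # two EPYC 7763 per server (from the text)
SLOTS_PER_SOCKET = 16    # memory slots per processor (from the text)
DIMM_GB = 128            # capacity of each DDR4 module (from the text)
MT_PER_S = 3200          # transfer rate in MT/s (from the text)

# Assumptions, not stated in the text: 8 DDR4 channels per socket and a
# 64-bit (8-byte) data path per channel.
CHANNELS_PER_SOCKET = 8
BYTES_PER_TRANSFER = 8


def node_memory_gb():
    """Total installed memory per node in GB."""
    return SOCKETS * SLOTS_PER_SOCKET * DIMM_GB


def peak_bandwidth_mb_s():
    """Theoretical peak memory bandwidth per node in MB/s."""
    per_channel = MT_PER_S * BYTES_PER_TRANSFER  # MT/s * bytes = MB/s
    return per_channel * CHANNELS_PER_SOCKET * SOCKETS


print(node_memory_gb())       # 4096
print(peak_bandwidth_mb_s())  # 409600 (about 409.6 GB/s)
```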

Interconnection

The compute and storage parts of the Supek supercomputer are connected by the latest version of Cray's Slingshot interconnect, which is designed for typical HPC workloads that process and exchange large amounts of data.

The key to Slingshot's performance is its link speed of 200 Gbps, which, combined with the 64 ports on each switch and the Dragonfly network topology, delivers very high transfer rates.
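To put the 200 Gbps and 64-port figures in perspective, the sketch below computes the aggregate one-direction bandwidth of a single switch and the ideal time to move 1 TB over one link. It ignores protocol overhead, encoding and contention, so real transfers will take longer; the function names are hypothetical.

```python
PORTS_PER_SWITCH = 64  # from the text
LINK_GBPS = 200        # from the text


def switch_aggregate_tbps(ports=PORTS_PER_SWITCH, link_gbps=LINK_GBPS):
    """Aggregate one-direction bandwidth of a switch in Tbit/s."""
    return ports * link_gbps / 1000


def ideal_transfer_seconds(n_bytes, link_gbps=LINK_GBPS):
    """Lower bound on the time to move n_bytes over one link (no overhead)."""
    return n_bytes * 8 / (link_gbps * 1e9)


print(switch_aggregate_tbps())         # 12.8
print(ideal_transfer_seconds(10**12))  # 40.0 (1 TB in 40 s at best)
```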

Beyond raw hardware performance, the Slingshot interconnect optimizes network data transmission with adaptive packet routing (which takes the load on other switches into account) and congestion control (which raises or lowers the bandwidth of the servers generating the traffic).
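The two mechanisms can be illustrated with generic textbook techniques. The sketch below is not Slingshot's actual algorithm, which the text does not describe: `choose_route` picks the candidate path with the lowest load-weighted cost (in the spirit of load-aware routing that may prefer a longer, idle path), and `adjust_rate` applies an additive-increase/multiplicative-decrease update to a sender's rate. All names, the cost formula, and the step and backoff parameters are hypothetical.

```python
def choose_route(candidates, load):
    """Return the candidate path with the lowest load-weighted cost.

    candidates: list of paths, each a list of switch names (hypothetical).
    load: dict mapping switch name -> estimated queue occupancy in [0, 1].
    Each hop costs 1 plus the load on that switch, so a longer but idle
    path can beat a shorter congested one.
    """
    def cost(path):
        return sum(1.0 + load.get(switch, 0.0) for switch in path)
    return min(candidates, key=cost)


def adjust_rate(rate_gbps, congested, min_gbps=1.0, max_gbps=200.0,
                step_gbps=10.0, backoff=0.5):
    """Additive-increase / multiplicative-decrease update of a sender's rate.

    On a congestion signal the rate is multiplied by `backoff`; otherwise it
    grows by `step_gbps`, always staying within [min_gbps, max_gbps].
    """
    if congested:
        return max(min_gbps, rate_gbps * backoff)
    return min(max_gbps, rate_gbps + step_gbps)


minimal = ["s1", "s2"]            # short path through busy switches
non_minimal = ["s3", "s4", "s5"]  # longer path through idle switches
load = {"s1": 0.9, "s2": 0.9, "s3": 0.1, "s4": 0.1, "s5": 0.1}
print(choose_route([minimal, non_minimal], load))  # ['s3', 's4', 's5']
print(adjust_rate(100.0, congested=True))          # 50.0
print(adjust_rate(195.0, congested=False))         # 200.0
```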

Dragonfly network topology: nodes (circles) are connected through switches (squares) into local groups over electrical links, and the groups are connected to the rest of the network by global links over optical cables (Source)
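The property that makes Dragonfly attractive is that any switch can reach any other in at most three hops: local, global, local. The sketch below is a toy model built on assumptions, not Supek's actual wiring: each group's switches are connected all-to-all by local links, every pair of groups is joined by exactly one global link, and those global links are assigned round-robin to the switches of each group. Real deployments may use different group sizes and several global links per group pair; `build_dragonfly`, `hops` and `diameter` are hypothetical helpers.

```python
from collections import deque
from itertools import combinations


def build_dragonfly(groups, switches_per_group):
    """Return an adjacency dict for a toy Dragonfly switch graph."""
    adj = {(g, s): set() for g in range(groups)
           for s in range(switches_per_group)}
    # Local links: all-to-all between switches of the same group.
    for g in range(groups):
        for a, b in combinations(range(switches_per_group), 2):
            adj[(g, a)].add((g, b))
            adj[(g, b)].add((g, a))
    # Global links: one per pair of groups, spread round-robin over switches.
    for g1, g2 in combinations(range(groups), 2):
        others1 = [x for x in range(groups) if x != g1]
        others2 = [x for x in range(groups) if x != g2]
        s1 = (g1, others1.index(g2) % switches_per_group)
        s2 = (g2, others2.index(g1) % switches_per_group)
        adj[s1].add(s2)
        adj[s2].add(s1)
    return adj


def hops(adj, src):
    """Breadth-first search: hop count from src to every reachable switch."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in adj[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def diameter(adj):
    """Largest shortest-path hop count between any two switches."""
    return max(max(hops(adj, n).values()) for n in adj)


net = build_dragonfly(groups=4, switches_per_group=3)
print(diameter(net))  # 3
```

With 4 groups of 3 switches, each switch has two local links and one global link, and the worst-case route between switches is exactly three hops.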

Data storage

The storage system for user jobs on the Supek cluster is an HPE ClusterStor E1000.

The HPE ClusterStor E1000 storage architecture is based on a flexible hardware design that uses the latest storage technology, including PCIe Gen 4 NVMe flash SSDs, HPE's 200 Gbps Slingshot networking and very high density enclosures. Metadata-optimized storage on high-performance NVMe flash provides an optimal I/O path for workloads with sequential I/O, high IOPS, or both. The design also allows performance and capacity to be scaled independently.

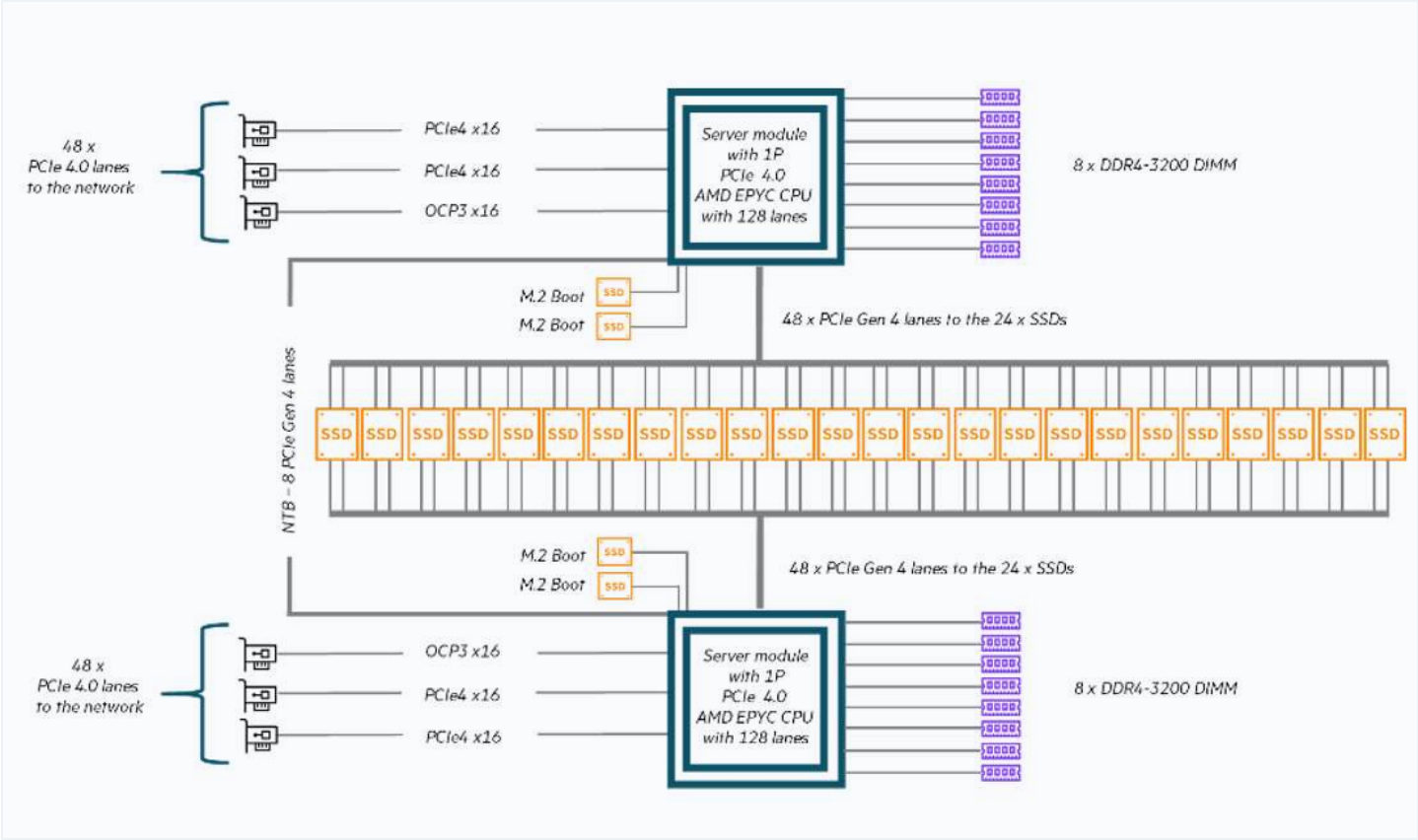

The basic building block is a server node that serves as the main storage-capacity unit (Flash Scalable Storage Unit, SSU-F) and is also used as the metadata server (Metadata Management Unit, MDU) and the management server (System Management Unit, SMU). Each element contains two motherboards, each with one processor, and 24 NVMe SSD slots that are populated according to the element's role.

Schematic representation of the HPE ClusterStor E1000 system element

The solution consists of:

1x System Management Unit, SMU, 5x 1.6TB NVMe SSD

1x Metadata Management Unit, MDU, 24x 3.2TB NVMe SSD

6x Flash Scalable Storage Units, SSU-F, 24x 7.68TB NVMe SSD

2x Local Management Network switches, LMN

2x Slingshot switches, SS

1x Flash Scalable Storage Unit, SSU-F, 24x 7.68TB NVMe SSD with a total capacity of 184TB, providing local disk space for the CPU and GPU servers
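The drive counts in the list above determine the raw capacity of each unit. The sketch below multiplies them out (units × drives × drive size) using decimal units (1 TB = 1000 GB); it reports raw, pre-RAID and unformatted capacity, so the usable space is lower. `UNITS` and `raw_capacity_gb` are hypothetical names.

```python
# (unit, count, drives per unit, drive capacity in GB), from the list above.
UNITS = [
    ("1x SMU", 1, 5, 1600),
    ("1x MDU", 1, 24, 3200),
    ("6x SSU-F", 6, 24, 7680),
    ("1x SSU-F (local disk space)", 1, 24, 7680),
]


def raw_capacity_gb(count, drives, drive_gb):
    """Raw (pre-RAID, unformatted) capacity of `count` identical units."""
    return count * drives * drive_gb


for name, count, drives, drive_gb in UNITS:
    print(f"{name}: {raw_capacity_gb(count, drives, drive_gb) / 1000:.2f} TB")
# 1x SMU: 8.00 TB
# 1x MDU: 76.80 TB
# 6x SSU-F: 1105.92 TB
# 1x SSU-F (local disk space): 184.32 TB
```

The last line matches the 184 TB quoted for the SSU-F that provides local disk space.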